Joint Advanced Student School - JASS 2016

Software Development for Mobile Platforms and the Internet of Things

Supervisors:

Prof. Kirill Krinkin (JetBrains Research, SPbAU, LETI) and Prof. Bernd Brügge (TUM)

Assistants:

Stephan Krusche (TUM), Mark Zaslavsky (SPbAU), Vladimir Chernokulsky (SPbAU)

March 21 - 27, 2016, St. Petersburg, Russia

About School

|

Students and instructors took part in the Russian German Joint Advanced Student School (JASS) where students developed applications for mobile devices and the Internet of Things. JASS was held from 21 to 27 March 2016 in St. Petersburg, on the basis of JetBrains Research. The purpose of this event was to acquire soft skills in an international team and to become familiar with the latest technologies. The key of the school was intensive development of small projects in 5 mixed teams of 4 people (including 10 students from Russia and 10 students from Germany). Participants were provided with a wide variety of equipment ranging from simple sensors and ending with tablets, smart watches and controllers, as well as all necessary tools for development. The teams were devoted to the development for mobile platforms and the Internet of things, the characteristics of the user interface design for handheld devices (wearable devices) and the best programming practices. While using lightweight agile methods like e.g. daily standup meetings, the teams focused on the rapid development of core features of their project ideas. The results of the five teams can be seen below. |

Multimodel iNTeraction

Overview

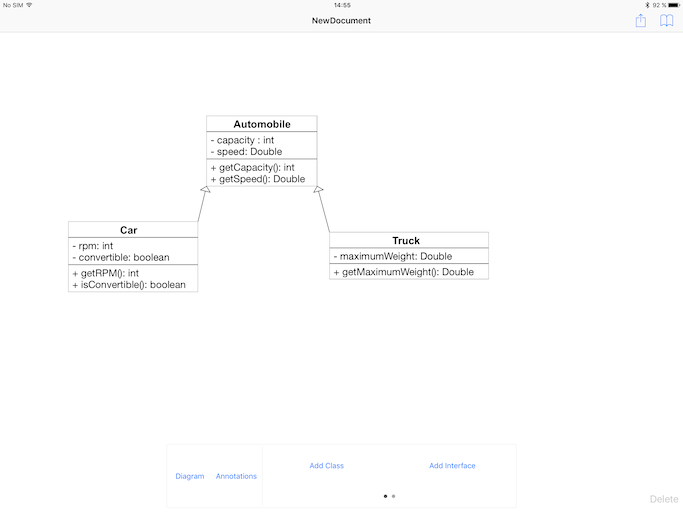

Collaboration is as an important aspect to modeling for having a shared understanding and representation of a complex phenomena. Models are frequently used not only to have a clear understanding of the system or the design space, but also as communication artifacts. (MiNT) is a modeling tool which facilitates real-time collaboration on models during early stage requirements engineering process. MiNT allows to collaborate on the creation and editing of informal models, and enables collaborative modeling sessions across iOS and Android devices. Additionally, MiNT supports the transformation of informal to formal models.

Features

- Cross platform (iOS and Android), real-time informal modeling

- Import existing models and annotate

- Support for Apple Pencil

- Formal modeling using UML Class diagram notation on iOS devices

Technologies

- Mobile Development for iOS and Android

- Peer to Peer Communication

- Real Time Collaboration

Team

|

|

|

|

|

Paul Tolstoi (TUM) |

Nitesh Narayan (TUM) |

Sergey Sokolov (SPbAU) |

Ruslan Thakokhov (SPbAU) |

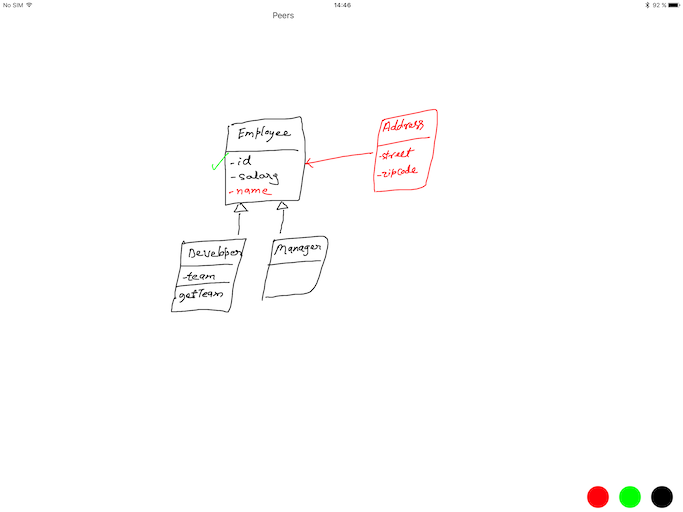

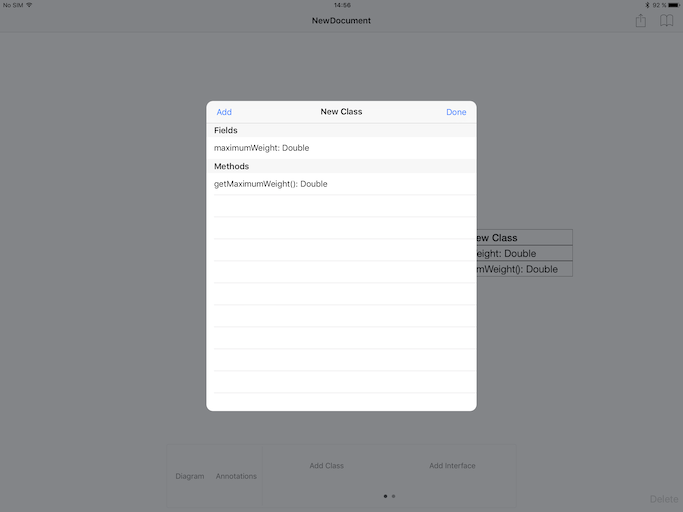

Screenshots

|

|

|

| Support for collaborative informal modeling across multiple devices (iOS and Android, both). Annotate in real-time for refactoring and refinement. Precise input with apple pencil and any other stylus. | Formal Modeling using UML Class diagram notation for iOS platform. Save and load diagrams for model evolution. | Simple dialog to quickly add more details to Object (attributes and behavior). |

Trailer

Quadcopter Autopilot

Overview

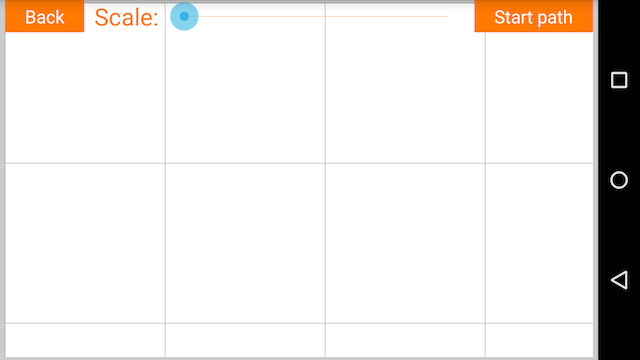

Quadcopter Autopilot is your personal cameraman. It controls a camera-equipped quadcopter and is the perfect companion for extreme sports and other activities. The goal of this project was the creation of automatic hardware / software which allows you to capture dynamic scenes using the quadcopter in several operation modes: target tracking, static post object, path following.

Features

- Sensor-guided precise steering

- Tracking mode: The quadcopter follows you as you go and centers the camera view onto you

- Flight path planning and following: Allows you to draw a path on a map that the quadcopter then follows

Technologies

- Camera

- Quadcopter

- Object Tracking

- Image Recognition

Team

|

|

|

|

|

Dominique d'Argent (TUM) |

Cecil Wöbker (TUM) |

Roman Fedorov (SPbAU) |

Yurii Rebryk (SPbAU) |

Screenshots

|

|

| The drone can fly autonomously following a person or a predefined path. | With computer vision technology, the drone can follow people autonomously. |

|

|

| Users can draw on a simple canvas to plan a mission and guide our drone. | The drone system can act on its own and accomplish complex tasks in environments were human control is not possible. |

Trailer

KneeHapp - Rehabilitation Monitoring on the Wrist

Overview

The rupture of the anterior cruciate ligament (ACL) of the knee is a severe injury that often causes the loss of physical capabilities of the individual and requires up to a year of rehabilitation. It occurs mainly in the young and active population and often professional athletes, for example famous soccer players, suffer ACL injuries.

During the rehabilitation phase, patients have to perform several different exercises in order to recover. They do this mostly unattended at home and only see their doctor every three months. The treatment prescribed by the doctor is then based on the observations from these visits.

KneeHapp is a smart knee bandage developed at TUM, that aims to support the patients performing the exercises correctly and to provide relevant metrics to the doctor to enable better treatment decisions. KneeHapp compromises a knee bandage with two inertial measurement units (IMU) and an iOS App for mobile devices. Based on motion data that is received from the IMUs, the app can display the relevant exercise parameters to the patient in real time and collect necessary data that is useful for the doctor.

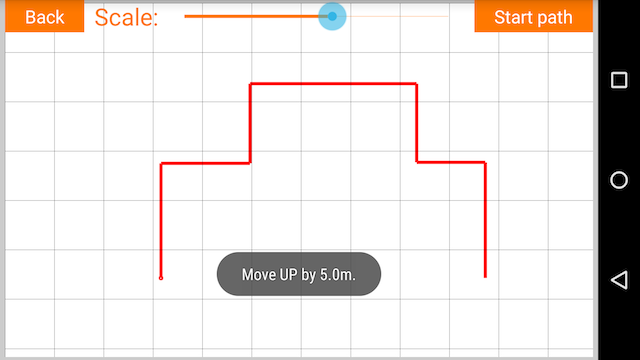

KneeHapp Watch extends this idea using the Apple Watch. The smart watch enables the display of real time data directly on the patient’s wrist, which is a huge improvement over the rather bulky smartphones or even tablets. The patient now can track the relevant parameters while performing the exercise without having to pay attention to the smartphone or tablet, thus enabling him to focus on the exercise without further distraction. In addition, the watch can also control the exercise workflow, so that the patient can easily select another type of exercise without any interruption.

Features

- Supports 6 different exercises for rehabilitation from ACL, for example range of motion exercise, one leg squat, and side hops

- Retrieval and processing of exercise performance data from the knee bandage

- Real time display of relevant exercise parameters on the Apple Watch

- Intuitive visual feedback on exercise performance for the patient

- Control of the exercise workflow directly on the Apple Watch

Technologies

- Mobile Development: iOS 9, watchOS 2

- Smart Watch: Apple Watch

- Signal Processing using Arduino and IMUs Bluetooth Low Energy for communication between knee bandage and iPhone and between Apple Watch and iPhone

Team

|

|

|

|

|

Kirsten Rauffer (TUM) |

Fabian Warthenpfuhl (TUM) |

Roman Belkov (SPbAU) |

Sophia Smolina (SPbAU) |

Screenshots

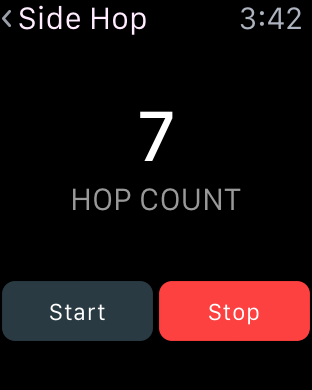

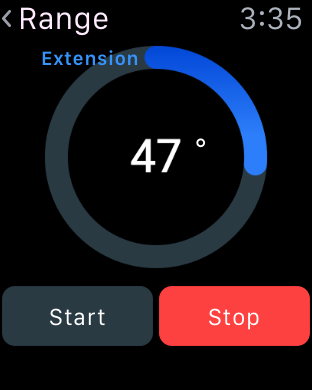

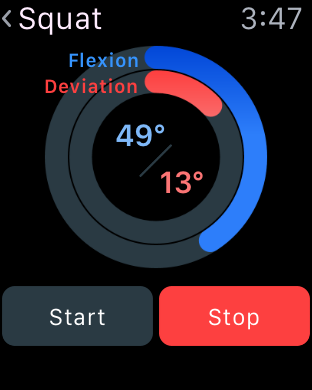

|

|

|

|

|

| The user can control the exercise workflow directly on his wrist. | The app can assist with a simple running exercise by directly starting and stopping the timer depending on the patients movement. | The number of performed hops is counted automatically for the side hop exercise. | For the range of motion exercise the current amount of knee flexion is displayed directly on the watch. | When performing the squat exercise, the patient can observe the flexion as well as the deviation on the watch. |

Trailer

Octopus - Mobile First Responder for Emergencies

Overview

Strokes occur when the blood supply to the brain is interrupted or reduced. When the brain is deprived of the oxygen and nutrients transported in the blood, brain cells start to die. The earlier a stroke victim receives treatment, the higher the chances of her brain to recover without symptoms such as limb paralysis. Octopus is an IoT system for optimizing the treatment of stroke patients. Octopus consists of a set of hardware sensors (e.g. heart rate, breathing, brain activity sensor) and mobile devices (e.g. a tablet device, smart watch) that are used to collect patient data inside the ambulance while the patient is transported to the hospital. Based on the collected data, a report is sent to the hospital so that the adequate resources (personal, rooms, devices) needed for the treatment start to be prepared.

Features

- Sensor set to acquire patient's vital signs

- iPad Interface for first responders to quickly input patient’s health data

- Automatic Patient Condition report generation by integration of patient data

- Infrastructure to facilitate communication between hospital and ambulance

- Remote-monitoring of patient’s vital signs

Technologies

- Hardware development (physiological sensors)

- Mobile development (Apple Watch, iOS development)

- Backend development

- MQTT messaging protocol

Team

|

|

|

|

|

Andreas Seitz (TUM) |

Juan Haladjian (TUM) |

Vladislav Sazanovich (SPbAU) |

Pavel Yurgin (SPbAU) |

Screenshots

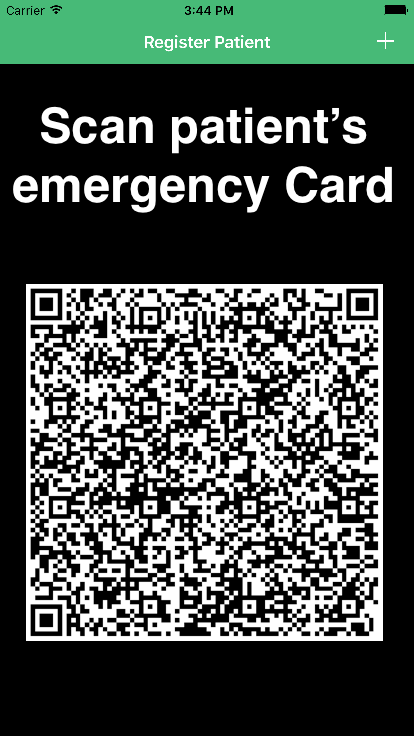

|

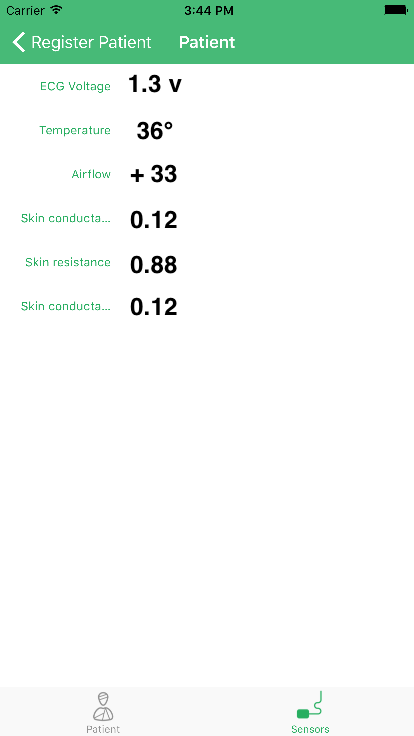

|

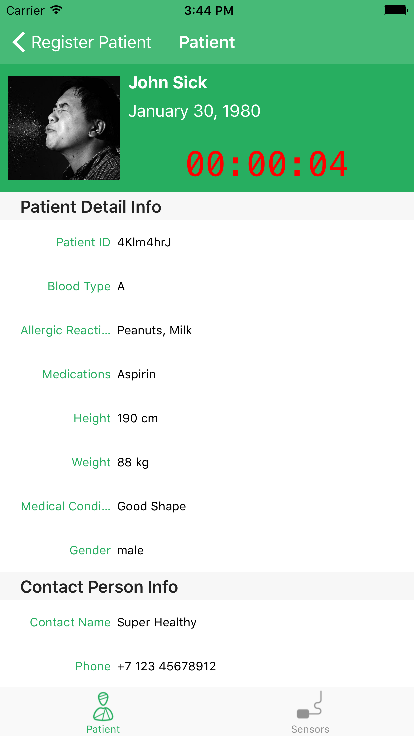

|

| By scanning patient’s Emergency Card with the Octopus App, patients are automatically registered at the hospital | Octopus offers a UI for emergency responders to view and add further information relevant to the patient’s particular condition | Interface to monitor data acquired by sensors |

Trailer

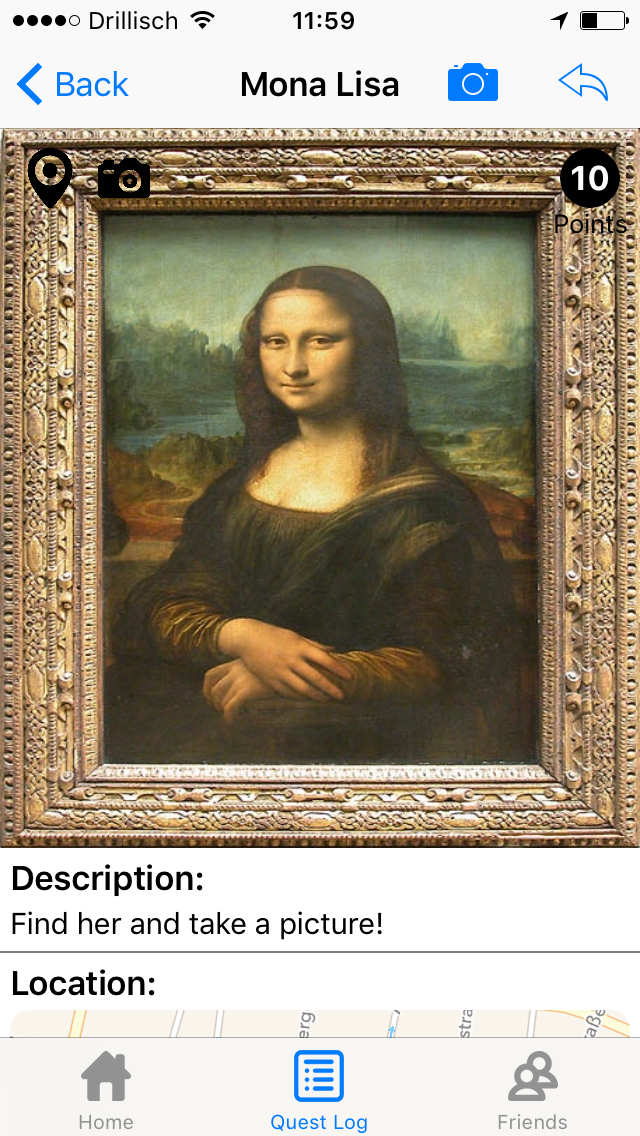

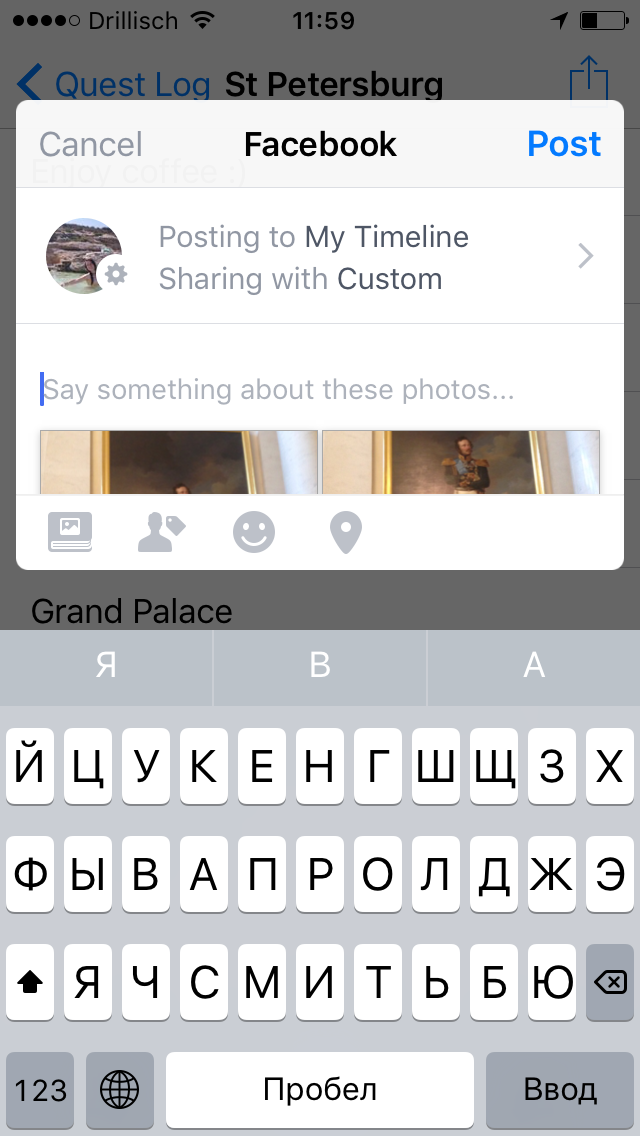

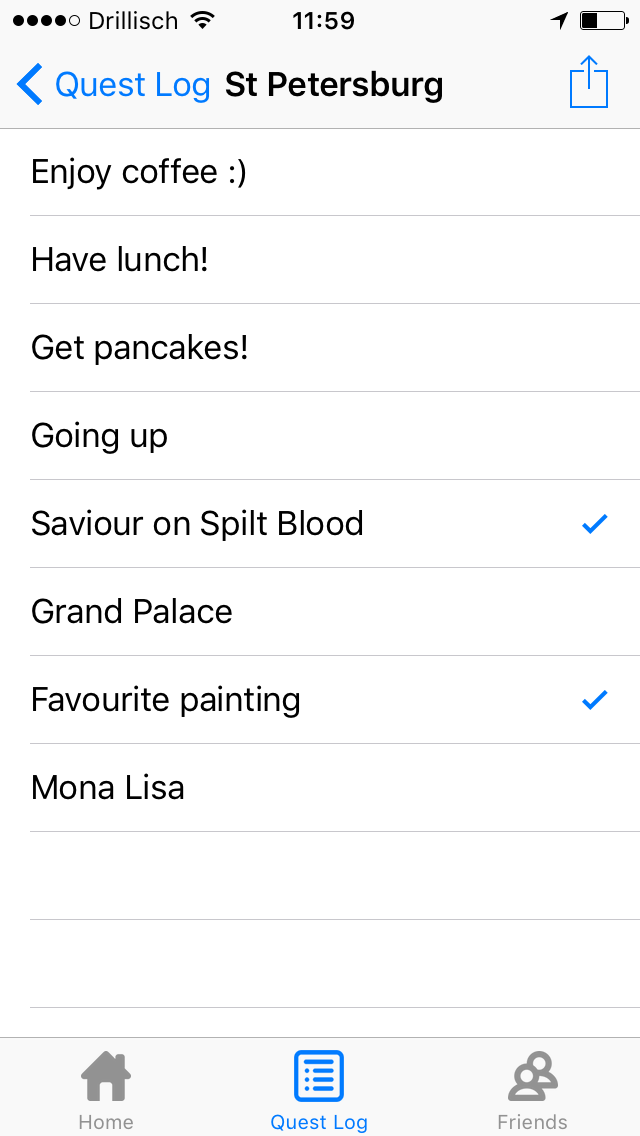

Geo Quest Travel Game

Overview

To travel and to visit new cities - it's great fun, but the trip planning can be complex and time-consuming. Here comes GeoQuest! This app will help you plan your time: it tells which places to visit, what kind of food is worth a try, as well as activities in the city, where you can take part. Once you specify your preference, the app will generate personalized quests through the city for you. It will make sure that your visit was informative and interesting: during the passage of quests you will answer questions and take photos to earn bonus points. With the app you can find a number of other parties and unite for joint assignments. The application also provides an opportunity to tell about their adventures in social networks.

Features

- Loading quest details from a server based on user preferences

- Hidden tasks can be discovered when the user is walking around the city

- Location tracking through GPS

- Supports indoor locations through iBeacons

- Take Photos

- User travels to the location specified in the task and is asked to either take a photo or answer a question

- Share photos to Facebook/Twitter

Technologies

- REST, HTTP, Web API

- Python Flask

- Mobile Development

- Location, GPS

- iBeacons

Team

|

|

|

|

|

Irina Camilleri (TUM) |

Stefan Kofler (TUM) |

Mark Geller (SPbAU) |

Elizaveta Tretyakova (SPbAU) |

Screenshots

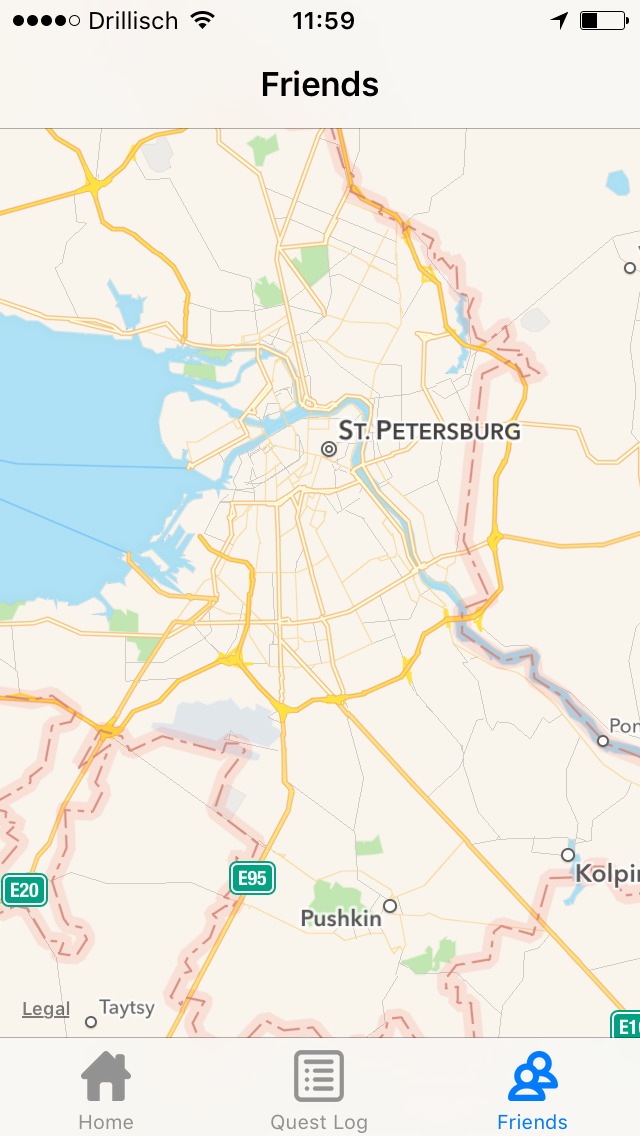

|

|

|

|

|

| Users can view the map of other travelers nearby also using the app | To complete a task a user either has to take a picture or answer a question | Share photo log to social media | User starts/continues a quest which includes categories of tasks he/she is interested in | Quests are made up of a list of tasks |

Trailer

Supervisors

|

|

|

(TUM) |

(JetBrains, SPbAU) |

Assistants

|

|

|

|

(TUM) |

Mark Zaslavsky (SPbAU) |

Vladimir Chernokulsky (SPbAU) |